Exploiting Context in Handwriting Recognition Using Trainable Relaxation Labeling

Abstract

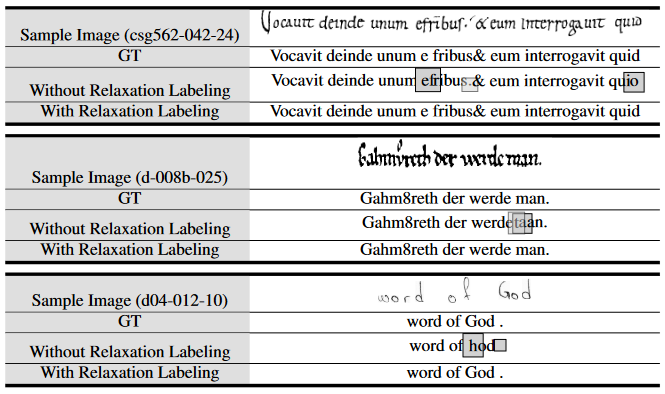

Handwritten Text Recognition (HTR) is a fast-moving research topic in the computer vision and machine learning communities. Many models have been introduced over the years, one of the most well-established being the Convolutional Recurrent Neural Network (CRNN), which combines convolutional feature extraction with recurrent processing of the visual embeddings. Such a model, however, has limitations, notably a limited capability to account for contextual information. To counter this problem, we propose a new learning module, derived from relaxation labeling processes and built on top of the convolutional part of a classical CRNN model, that exploits the global context to reduce local ambiguities and increase the global consistency of the predictions. Experiments performed on three well-known handwriting recognition datasets show that the relaxation labeling procedures improve the overall transcription accuracy at both the character and word levels.
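To make the underlying idea concrete, the following is a minimal sketch of a classical, non-trainable relaxation labeling process in plain Python, using the multiplicative update with nonnegative compatibilities. It is not the trainable module proposed in this work. The function name `relaxation_labeling`, the single compatibility matrix shared by every pair of objects, and the fixed iteration count are illustrative assumptions. Each object `i` (for instance, a character position) holds a probability distribution `p[i]` over labels. Its "support" for a label sums, over all other objects, the compatibility of that label with each neighbor label weighted by the neighbor's current probability. The support then reweights and renormalizes the object's distribution.

```python
from typing import List

Matrix = List[List[float]]


def relaxation_labeling(p: Matrix, r: Matrix, iterations: int = 10) -> Matrix:
    """Classical multiplicative relaxation labeling (illustrative sketch).

    p[i][l]: initial probability that object i takes label l (rows sum to 1).
    r[l][mu]: nonnegative compatibility between label l on one object and
              label mu on another; assumed shared by every pair of objects.
    """
    n, m = len(p), len(p[0])
    p = [row[:] for row in p]  # work on a copy, leave the input untouched
    for _ in range(iterations):
        # Support: q[i][l] = sum over j != i and labels mu of r[l][mu] * p[j][mu]
        q = [
            [
                sum(r[l][mu] * p[j][mu] for j in range(n) if j != i for mu in range(m))
                for l in range(m)
            ]
            for i in range(n)
        ]
        new_p = []
        for i in range(n):
            # Reweight each label by its contextual support, then renormalize
            unnorm = [p[i][l] * q[i][l] for l in range(m)]
            z = sum(unnorm)
            new_p.append([u / z for u in unnorm] if z > 0 else p[i][:])
        p = new_p
    return p


# Two objects, two labels; the compatibilities reward agreeing labels.
result = relaxation_labeling([[0.9, 0.1], [0.4, 0.6]], [[1.0, 0.0], [0.0, 1.0]])
print(result)
```

The second object is ambiguous on its own and slightly prefers label 1. The confident first object, together with compatibilities that favor agreement, pulls both distributions toward label 0. This is the kind of context-driven reduction of local ambiguity that the abstract describes.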